The rise of generative AI agents—autonomous entities capable of reasoning, planning, and executing actions across enterprise systems—promises unprecedented efficiency. Sales teams use them to update CRMs, finance teams use them to reconcile transactions, and IT teams deploy them for ticket triage. This shift is moving rapidly from experimentation to enterprise reality, with some estimates suggesting a majority of enterprises plan to use these agents within the next few years.

Yet this explosive growth has a silent, dangerous counterpart: Agent Sprawl.

Agent sprawl occurs when development teams and business units deploy agents across various platforms (hyperscalers, internal automation tools, or SaaS vendors) without a centralized plan or oversight. This echoes the challenges of Shadow IT or SaaS Sprawl, but with a critical difference: AI agents don’t just consume licenses. They can act autonomously, chain decisions, and interact directly with core business systems, which dramatically raises the stakes for data risk, compliance, and operational stability. The underlying problem is that enterprises lack a cohesive, cross-platform mechanism for cataloging these autonomous assets and tracking the data they move and the actions they take. This visibility vacuum is generating unprecedented data risk, a governance tsunami that demands immediate executive intervention.

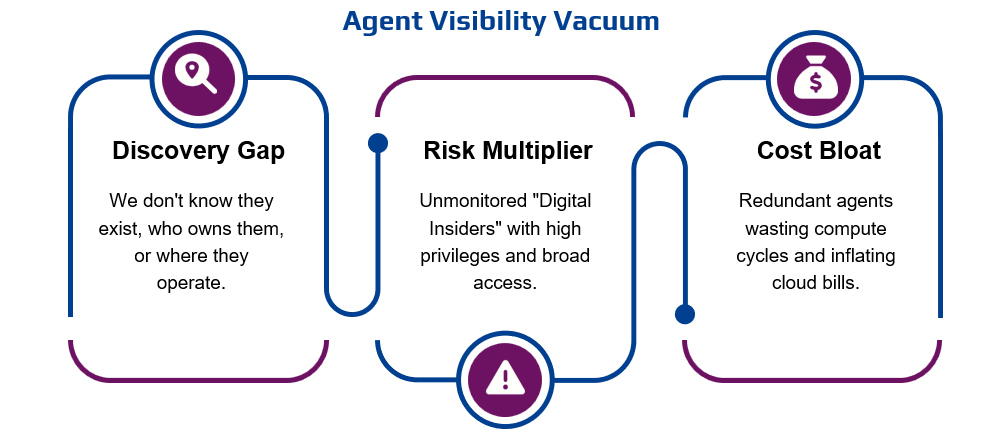

The Uncataloged Tsunami – Visibility Vacuum

The fragmentation of AI agent development leads directly to a vacuum in centralized visibility, which is the root cause of the accumulating risk.

The speed of low-code and no-code agent platforms encourages rapid development in silos, resulting in an environment where duplication and chaos are nearly inevitable. Teams build agents in isolation, unaware of similar, overlapping workflows elsewhere. The discovery gap is therefore immense: the organization literally does not know how many active agents exist, who is responsible for their maintenance, or where they operate.

This lack of control is not merely a governance issue; it’s a direct source of inefficiency and multiplying risk.

First, redundancy and cost balloon as multiple agents are built to solve nearly identical problems (e.g., several different “summarize customer feedback” agents). These redundant workloads waste compute cycles and drive up infrastructure bills.
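As a minimal sketch of how such duplication might be surfaced, assuming each agent’s capabilities (model tasks, data reads, data writes) are already recorded as simple strings, the snippet below scores every pair of agents with Jaccard similarity and flags pairs above a threshold. The inventory, agent names, capability strings, and the 0.5 threshold are all hypothetical; real overlap detection would likely need richer signals than set similarity.

```python
from itertools import combinations

# Hypothetical inventory: agent name -> set of capability strings
# ("llm:" = model task, "read:" / "write:" = data access). Illustrative only.
AGENTS = {
    "feedback-summarizer-sales": {"llm:summarize", "read:crm.feedback", "write:slack"},
    "feedback-digest-support": {"llm:summarize", "read:crm.feedback", "write:email"},
    "invoice-reconciler": {"read:erp.invoices", "read:bank.statements", "write:erp.ledger"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of capabilities two agents share (0.0 = none, 1.0 = identical)."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def find_overlaps(agents: dict[str, set[str]], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Return (agent, agent, score) for every pair at or above the threshold."""
    pairs = []
    # Sorting by name makes the output order deterministic.
    for (name_a, caps_a), (name_b, caps_b) in combinations(sorted(agents.items()), 2):
        score = jaccard(caps_a, caps_b)
        if score >= threshold:
            pairs.append((name_a, name_b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    for a, b, score in find_overlaps(AGENTS):
        print(f"possible duplicate: {a} <-> {b} (similarity {score})")
```

Here the two feedback agents share two of four distinct capabilities (similarity 0.5), so they are flagged as consolidation candidates, while the reconciler shares nothing with either.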

Second, and far more critical, is the risk multiplier. Uncataloged agents become unmonitored liabilities. They can bypass traditional security controls because they operate inside the perimeter using trusted credentials. An agent with overly broad permissions, or one susceptible to prompt injection attacks, becomes a mechanism for untraceable data leakage and unauthorized changes to critical systems. This uncontrolled growth creates blind spots that security teams cannot monitor without a foundational inventory.

The Critical Shift: From Analytics to Operations

The governance models that many enterprises currently rely on were built primarily for analytics. Early AI efforts focused on analytical data: historical, retrospective, read-only data housed in lakehouses and warehouses. Current data catalogs and governance solutions are designed to manage these passive data assets and enforce access controls.

AI agents, however, introduce a critical shift by moving into operational systems.

Agents are no longer limited to pulling historical trends from a data lake; they are accessing and acting on real-time operational data in systems like SAP, Workday, and CRM platforms. They can trigger payments, update patient records, or modify inventory levels. This autonomy means the failure mode has fundamentally changed:

- Risk Profile Change: Failure is no longer limited to generating an incorrect report; it can lead to catastrophic system disruption or the propagation of corrupted data. A logic error in a credit processing agent, for example, could cascade an erroneous debt classification across multiple production systems before a human ever intervenes.

- The Digital Insider Threat: Agents authenticate with service accounts and API keys that often grant broad permissions, making them “digital insiders.” If these credentials are compromised, or if the agent is manipulated into revealing credentials or exfiltrating data, the internal threat is immediate and severe. Managing the lifecycle and privileges of these autonomous insiders is impossible without a dedicated inventory and risk management system.
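To make the cascade concrete, here is a minimal sketch that treats systems as nodes in a dependency graph and uses breadth-first search to list everything an error in one agent could reach. The graph and every system name in it are hypothetical; in practice the edges would have to come from recorded lineage, which is exactly the information the following sections argue most enterprises lack.

```python
from collections import deque

# Hypothetical dependency graph: each key is an agent or system, and its list
# holds the systems that receive data from it. Names are illustrative only.
WRITES_TO = {
    "credit-agent": ["crm"],
    "crm": ["billing", "collections"],
    "billing": ["general-ledger"],
    "collections": [],
    "general-ledger": [],
}


def blast_radius(start: str, graph: dict[str, list[str]]) -> list[str]:
    """Breadth-first search: every system an error at `start` could reach."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for downstream in graph.get(node, []):
            if downstream not in seen:
                seen.add(downstream)
                order.append(downstream)
                queue.append(downstream)
    return order


if __name__ == "__main__":
    print(blast_radius("credit-agent", WRITES_TO))
```

For the sample graph, a faulty credit agent reaches `crm`, `billing`, `collections`, and `general-ledger`, in that order: four production systems from a single logic error.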

Agentic Lineage: Key to Trust, Transparency, and Adoption

The current suite of data catalogs falls short precisely at the intersection of agent activity and operational data, because these tools primarily track authentication and authorization (AuthN/AuthZ), meaning who can access what, rather than Agentic Lineage: why an agent chose to take a specific action, and which data and tools it used to do so.

Agentic Lineage is the requirement to trace the agent’s full decision and execution path: from the initial prompt (or trigger) through its internal logic, the LLM call, the specific API or tool call, the data source accessed, the resulting action taken, and the final output. This visibility answers the crucial question: How did the agent arrive at this autonomous decision?
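One way to picture such a trace is as an ordered list of steps, each tagged with the platform where it ran. The sketch below assumes a hypothetical `LineageTrace` record (not a standard schema or any vendor’s API); its step kinds mirror the path described above, from trigger to final output.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageStep:
    """One hop in an agent's decision and execution path."""
    kind: str       # "trigger", "llm_call", "tool_call", "data_read", "action", or "output"
    detail: str     # what happened, in auditor-readable terms
    platform: str   # where the step ran, e.g. "gcp", "sap", "salesforce"
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


@dataclass
class LineageTrace:
    """Hypothetical per-run trace; not a standard or vendor schema."""
    agent_id: str
    run_id: str
    steps: list[LineageStep] = field(default_factory=list)

    def record(self, kind: str, detail: str, platform: str) -> None:
        self.steps.append(LineageStep(kind, detail, platform))

    def explain(self) -> str:
        """Render the path as one readable chain, oldest step first."""
        return " -> ".join(f"{s.kind}({s.detail}@{s.platform})" for s in self.steps)

    def platforms(self) -> list[str]:
        """Distinct platforms touched, in first-seen order."""
        return list(dict.fromkeys(s.platform for s in self.steps))


if __name__ == "__main__":
    trace = LineageTrace(agent_id="loan-review-agent", run_id="run-001")
    trace.record("trigger", "new loan application", "crm")
    trace.record("llm_call", "assess applicant risk", "gcp")
    trace.record("tool_call", "credit score lookup", "bureau-api")
    trace.record("data_read", "income records", "sap")
    trace.record("action", "reject application", "crm")
    trace.record("output", "rejection letter drafted", "crm")
    print(trace.explain())
    print(trace.platforms())
```

Because every step carries its platform, the same record answers both “how did the agent decide?” (`explain`) and “which systems did this decision touch?” (`platforms`).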

Establishing this lineage is the key to unlocking trust and scalable adoption:

- Enabling Transparency and Auditability: For compliance-heavy industries, the ability to explain autonomous actions is non-negotiable. Agentic lineage provides the necessary audit trail to answer complex governance questions, such as: “What specific data points and transformations were used by the agent when it rejected this customer’s loan application?”

- Driving Efficiency by Detecting Overlap: Lineage makes the hidden visible. It is the most direct way to audit and detect functionally redundant agents operating on different platforms, allowing the AI Leader to enforce consolidation, reduce duplicate compute, and ensure standardized results.

- Cross-Platform Risk Awareness (The True Gap): The greatest risk lies in the cross-platform nature of agent activity. When a GCP-deployed agent reads sensitive HR data from an on-premises SAP system and uses that information to update a record in a Salesforce cloud environment, no single governance solution currently traces this end-to-end flow. Agentic lineage must provide this unified, logical map of data movement to identify cross-boundary risk.
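Assuming lineage is already captured as hops that record where the data came from, where the agent ran, and where it wrote, flagging the cross-boundary case above reduces to a simple rule. This sketch is illustrative only: the `DataHop` fields, zone names, and sensitivity labels are assumptions, not an existing product’s data model.

```python
from dataclasses import dataclass

# Hypothetical sensitivity labels that should never cross a hosting boundary unreviewed.
SENSITIVE = {"confidential", "restricted"}


@dataclass(frozen=True)
class DataHop:
    """One read-then-write hop reconstructed from lineage (illustrative fields)."""
    agent_id: str
    agent_zone: str    # where the agent itself runs, e.g. "gcp"
    source: str        # system the data was read from
    source_zone: str   # e.g. "on-prem"
    target: str        # system the agent wrote to
    target_zone: str   # e.g. "saas"
    sensitivity: str   # label of the data that moved


def cross_boundary_risks(hops: list[DataHop]) -> list[str]:
    """Flag hops where sensitive data touches more than one hosting zone."""
    findings = []
    for hop in hops:
        zones = {hop.source_zone, hop.agent_zone, hop.target_zone}
        if hop.sensitivity in SENSITIVE and len(zones) > 1:
            findings.append(
                f"{hop.agent_id}@{hop.agent_zone}: {hop.sensitivity} data "
                f"{hop.source}@{hop.source_zone} -> {hop.target}@{hop.target_zone}"
            )
    return findings


if __name__ == "__main__":
    hops = [
        DataHop("hr-sync-agent", "gcp", "sap-hr", "on-prem", "salesforce", "saas", "restricted"),
        DataHop("ticket-triage", "saas", "ticket-queue", "saas", "itsm-portal", "saas", "internal"),
    ]
    for finding in cross_boundary_risks(hops):
        print(finding)
```

Only the HR hop is flagged: it moves restricted data through three zones (on-prem, GCP, SaaS), whereas the triage hop stays in one zone with non-sensitive data.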

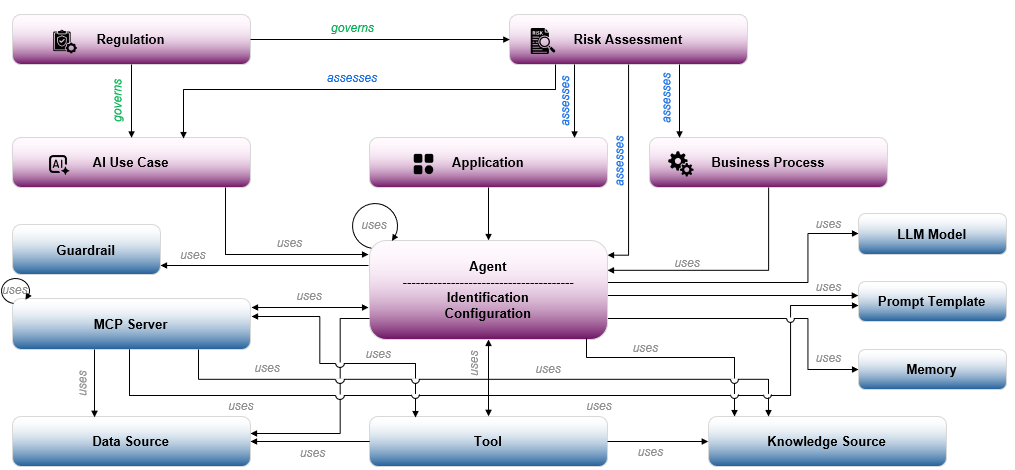

The AI Leader’s Necessity: Agent CMDB

The scale of this challenge necessitates a new category of tooling. The buyer for this solution must be the AI Leader, who understands that the agent sprawl crisis is analogous to the application management problem solved by the Configuration Management Database (CMDB).

The Agent CMDB is not merely a list of agents; it is the single source of truth for all autonomous assets, acting as a dynamic, centralized register that tracks not just who they are, but what they are allowed to do and where they operate.

The core functions of this system must go beyond passive cataloging to incorporate active, behavioral data:

- Identity & Ownership: Tracking the agent’s name, owner, development platform, underlying LLM, and security posture. This establishes clear, non-transferable accountability.

- Configuration & Capabilities: Detailing the agent’s scope, which external tools (APIs) it is configured to use, and its assigned permissions, which should follow least privilege.

- Relationships (Lineage Feed): Critically, the Agent CMDB must ingest and surface the real-time, cross-platform Agentic Lineage data. This linkage shows data dependencies, enabling accurate change impact prediction and auditability.
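A minimal sketch of what a single configuration item in such a register might hold, assuming the lineage feed supplies the permissions an agent actually exercised and the systems it depends on. The `AgentCI` class, its field names, and the sample values are hypothetical. Comparing granted against observed permissions is one way the lineage feed could support least privilege, and the dependency set supports simple change-impact queries.

```python
from dataclasses import dataclass, field


@dataclass
class AgentCI:
    """Hypothetical Agent CMDB configuration item; field names are illustrative."""
    # Identity & ownership
    name: str
    owner: str
    platform: str
    llm: str
    # Configuration & capabilities
    tools: set[str] = field(default_factory=set)
    granted_permissions: set[str] = field(default_factory=set)
    # Relationships, populated from the lineage feed
    observed_permissions: set[str] = field(default_factory=set)
    depends_on: set[str] = field(default_factory=set)

    def unused_permissions(self) -> set[str]:
        """Granted but never exercised: candidates for revocation under least privilege."""
        return self.granted_permissions - self.observed_permissions

    def out_of_policy(self) -> set[str]:
        """Exercised but never granted: a sign of a stale record or a control gap."""
        return self.observed_permissions - self.granted_permissions


def impacted_by(system: str, registry: list[AgentCI]) -> list[str]:
    """Names of agents whose recorded dependencies include a system about to change."""
    return sorted(ci.name for ci in registry if system in ci.depends_on)


if __name__ == "__main__":
    crm_updater = AgentCI(
        name="crm-updater",
        owner="sales-ops",
        platform="low-code-platform-a",   # hypothetical platform label
        llm="example-llm",                # hypothetical model label
        tools={"crm.update_record"},
        granted_permissions={"crm:read", "crm:write", "crm:delete"},
        observed_permissions={"crm:read", "crm:write"},
        depends_on={"salesforce"},
    )
    print(crm_updater.unused_permissions())
    print(impacted_by("salesforce", [crm_updater]))
```

In this example the agent holds a delete grant it never uses, which a reviewer could revoke, and a planned Salesforce change can be traced to the one agent that depends on it.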

By implementing an Agent CMDB fed by Agentic Lineage data, the AI Leader can transition from qualitative fear to quantitative risk management, actively tracking the Agent Risk Exposure (ARE) of every autonomous entity that touches sensitive operational data. This system transforms unmanaged chaos into a governed, scalable ecosystem.
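The text does not define how Agent Risk Exposure should be calculated. Purely to illustrate what moving from qualitative to quantitative tracking could look like, the sketch below combines data sensitivity, write reach, unused grants, and ownership into a single score. Every weight and factor here is an assumption chosen for the example, not a standard metric.

```python
# Hypothetical weights; the article does not define how ARE is computed.
SENSITIVITY_WEIGHT = {"public": 1, "internal": 2, "confidential": 4, "restricted": 8}


def agent_risk_exposure(
    sensitivities: list[str],
    write_targets: int,
    unused_grants: int,
    has_owner: bool,
) -> int:
    """Illustrative exposure score; higher means riskier. Not a standard metric."""
    # Most sensitive data class the agent touches (0 if it touches none).
    data_score = max((SENSITIVITY_WEIGHT[s] for s in sensitivities), default=0)
    # Each system the agent can write to widens the potential blast radius.
    score = data_score * (1 + write_targets)
    # Unused permissions are standing attack surface.
    score += 2 * unused_grants
    # An orphaned agent (no accountable owner) has its score doubled.
    if not has_owner:
        score *= 2
    return score


if __name__ == "__main__":
    print(agent_risk_exposure(["internal", "restricted"], write_targets=2, unused_grants=1, has_owner=True))
    print(agent_risk_exposure(["public"], write_targets=0, unused_grants=0, has_owner=False))
```

The first agent, which writes restricted data into two systems, scores 26; the read-only public-data agent scores 2 even after the orphan penalty. Whatever formula an organization adopts, the point is that every input comes from the CMDB and its lineage feed, so the score can be recomputed as agents change.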

Governing Autonomy, Unleashing Agentic Value

The cost of inaction (unchecked agent sprawl leading to unmanageable operational and compliance risks) far outweighs the investment in centralized governance. Enterprises must recognize that they cannot innovate responsibly at scale without a foundation of control.

To successfully govern autonomy and unleash the true value of AI agents, AI Leaders and data governance executives must commit to three immediate imperatives:

- Stop building agents in silos and recognize that every newly deployed agent is a Configuration Item (CI) that requires full lifecycle management.

- Build the foundational layers of Agent Lifecycle Management, including discovery, inventory, and policy enforcement, championed and mandated by the AI Leader.

- Prioritize cross-platform agent lineage. Implement solutions that capture the full decision path, from LLM prompt to operational system action, ensuring that transparency and auditability are built into the fabric of every autonomous workflow.
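As a sketch of the first two imperatives, assuming a hypothetical central inventory and tool allow-list, a registration gate might refuse to admit any agent that lacks an owner, uses unapproved tools, or has lineage capture turned off. The names and rules below are illustrative, not a prescribed policy.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical allow-list maintained by the AI Leader's governance team.
APPROVED_TOOLS = {"crm.read", "crm.update", "ticket.create"}


@dataclass
class Registration:
    """What a team must declare before an agent becomes a managed configuration item."""
    name: str
    owner: Optional[str]
    tools: set[str] = field(default_factory=set)
    lineage_enabled: bool = False


def policy_violations(reg: Registration) -> list[str]:
    """Return every policy the registration fails; an empty list means compliant."""
    problems = []
    if not reg.owner:
        problems.append("no accountable owner")
    unapproved = sorted(reg.tools - APPROVED_TOOLS)
    if unapproved:
        problems.append("unapproved tools: " + ", ".join(unapproved))
    if not reg.lineage_enabled:
        problems.append("lineage capture disabled")
    return problems


def register(reg: Registration, inventory: dict[str, Registration]) -> bool:
    """Add the agent to the inventory only if it is new and passes every check."""
    if reg.name in inventory or policy_violations(reg):
        return False
    inventory[reg.name] = reg
    return True


if __name__ == "__main__":
    inventory: dict[str, Registration] = {}
    print(register(Registration("triage-bot", "it-ops", {"ticket.create"}, True), inventory))
    shadow = Registration("shadow-bot", None, {"payments.send"})
    print(policy_violations(shadow))
```

Returning the full list of violations, rather than stopping at the first, gives the requesting team everything it must fix in one pass.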

This strategic investment in the Agent CMDB and Agentic Lineage is not a constraint on innovation; it is the necessary prerequisite for scalable, responsible, and risk-aware AI adoption.